Kubernetes CRDs in Traefik: Overcoming the Limitations of Kubernetes Standardized Objects with Custom Resource Definitions

While Kubernetes provides built-in API objects and resources, the Kubernetes API can be extended with any object you like using Custom Resource Definitions (CRDs). This lets you capture the subtleties of your use case and tap into the flexibility Kubernetes offers.

As container orchestration continues to gain traction, Kubernetes has become a (if not the) standard for developers and DevOps engineers: they’re now used to declaring the desired state of their systems instead of deploying software by hand all over the place.

But how does one declare this desired state? And what if Kubernetes doesn’t fully capture the subtleties of your use case and you need to be a bit more descriptive than what it is aware of?

The goal of this post is to answer these very questions. In the context of Ingress Management (how your cluster accepts and reacts to incoming requests), we’ll walk you through the standardized objects, use them with Traefik, understand their limitations, and show you how to overcome them. (Spoiler: we’ll use Traefik CRDs.)

Understanding Kubernetes Objects

In a world where APIs are more and more prevalent, it’s no wonder that Kubernetes introduced a way to describe how your cluster must handle incoming requests: the Ingress object.

But if you look at the bigger picture, other objects are involved in the process. Let’s see which ones:

- Ingress: As we just said, Ingresses manage external access to services within a cluster. You’ll describe HTTP/HTTPS request patterns and which services are responsible for handling these.

- Pods: The smallest deployable Kubernetes unit, usually a single container (though a Pod can hold several). You can think of a Pod as a running instance (replica) of your application.

- Services: Because Kubernetes decides where it deploys a Pod, and because it might kill and respawn a Pod based on the health, workload, and lifecycle of the whole system, each Pod has an ephemeral identity and IP address. A Service provides a stable logical name (and virtual IP) for reaching a set of Pods.

- Deployments: Deployments are where you tell Kubernetes what containers you want to run in your Pods, and how many replicas you want it to run. There’s of course more to say, but for the sake of our tour, this is enough.

- ConfigMaps: ConfigMaps store non-sensitive, configuration-related data as key-value pairs. They allow you to decouple configuration data from container images, making managing and updating your applications easier.

- Secrets: Similar to ConfigMaps, Secrets store sensitive data such as passwords, tokens, and keys. They help protect sensitive information by allowing access control and keeping it separate from your container images and application code.
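As a minimal sketch of how ConfigMaps and Secrets are typically consumed, the manifests below define one of each and inject both into a Pod as environment variables. The names app-config and app-credentials, their keys, and the nginx image are hypothetical placeholders chosen for illustration.

```yaml
# Sketch only: all names, keys, and the image are hypothetical placeholders.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
  namespace: production
data:
  LOG_LEVEL: "info"          # non-sensitive setting
---
apiVersion: v1
kind: Secret
metadata:
  name: app-credentials
  namespace: production
type: Opaque
stringData:                  # written as plain text, stored base64-encoded by the API server
  DB_PASSWORD: "change-me"
---
apiVersion: v1
kind: Pod
metadata:
  name: app
  namespace: production
spec:
  containers:
    - name: app
      image: nginx:1.25
      envFrom:               # every key becomes an environment variable
        - configMapRef:
            name: app-config
        - secretRef:
            name: app-credentials
```

Keep in mind that base64 is an encoding, not encryption: the access control around Secrets is what protects them.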

Each object serves a specific purpose and contributes to the overall architecture. With a good understanding of the above concepts, you can already take full advantage of Kubernetes’ powerful features to create robust and scalable applications.

Using Kubernetes Ingress Object with Traefik

So if the Kubernetes Ingress Object describes the external access to your services, what handles it? The answer is the Ingress Controller, and Traefik is one of them (and we like to believe one of the best).

When you run Traefik on your Kubernetes cluster, it reads the Ingress objects to understand the routing you want to achieve, then routes requests accordingly. It’s as simple as that.
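For Traefik to read these objects, the corresponding providers must be enabled in its static configuration. Below is a minimal sketch, assuming a file-based static configuration; installers such as the official Helm chart may already set this up for you. The entry point names web and websecure match the ones used later in this article.

```yaml
# Traefik static configuration (e.g. traefik.yml), a sketch rather than a complete setup
entryPoints:
  web:
    address: ":80"
  websecure:
    address: ":443"
providers:
  kubernetesIngress: {}   # watch standard Kubernetes Ingress objects
  kubernetesCRD: {}       # watch Traefik's own CRDs (IngressRoute, Middleware, ...)
```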

Basic Example: TLS and Two Paths

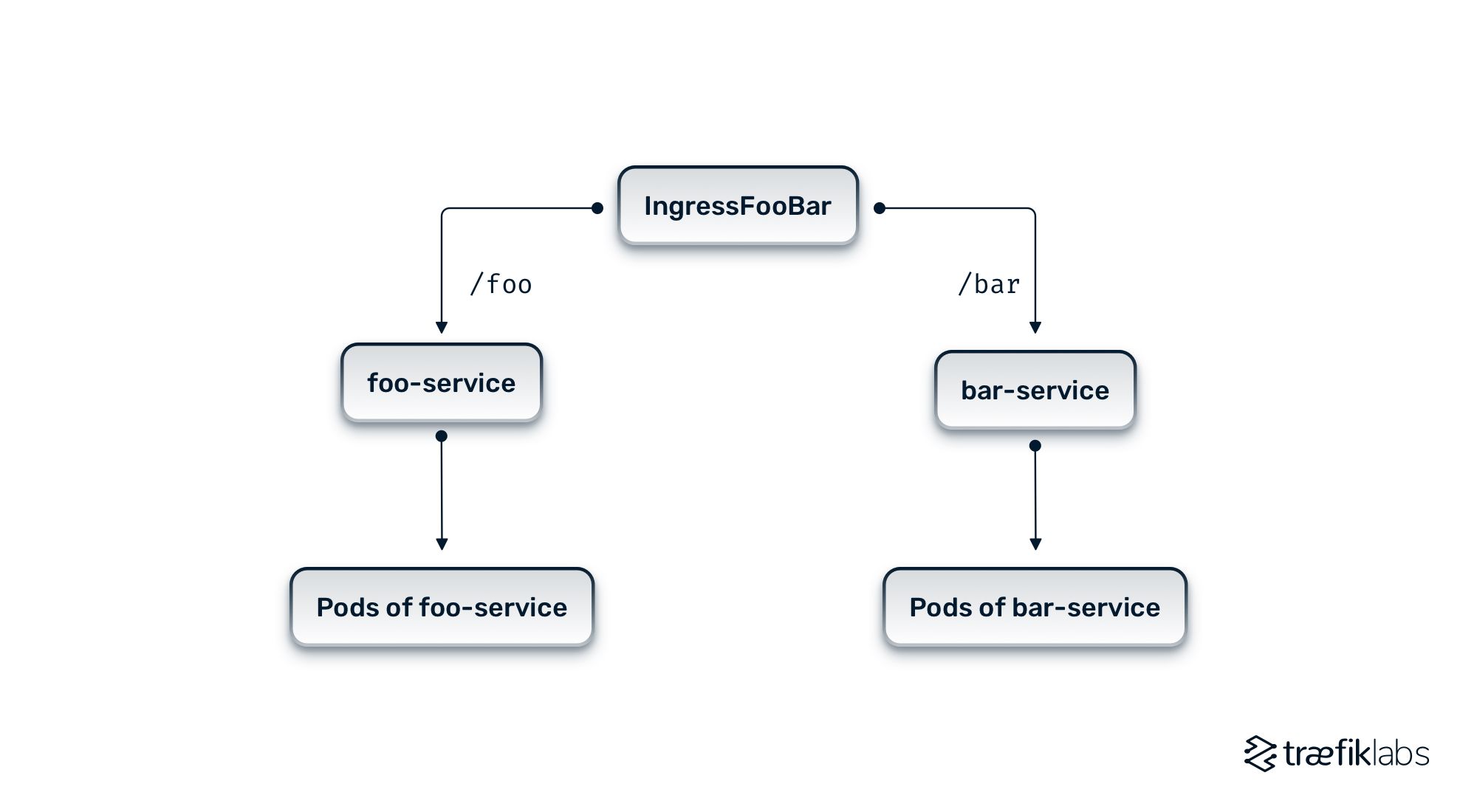

Let’s define two different paths (/foo, /bar), routing to two different services (foo-service, bar-service).

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-foo-bar   # resource names must be lowercase DNS-1123 names
  namespace: production
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  tls:
    - hosts:
        - example.com
      secretName: example-tls-secret
  rules:
    - host: example.com
      http:
        paths:
          - path: /foo
            pathType: Exact
            backend:
              service:
                name: foo-service
                port:
                  number: 80
          - path: /bar
            pathType: Exact
            backend:
              service:
                name: bar-service
                port:
                  number: 80
```

In the above example, the Ingress resource targets a specific host (example.com) with two paths. The first path, /foo, routes traffic to the backend service foo-service. The second path, /bar, routes traffic to the backend service bar-service.

Both services are reached on port 80. Additionally, the tls section specifies that TLS should be used for the host example.com and that the TLS certificate and private key are stored in a Secret named example-tls-secret.

To create this Secret, you can run the command:

```shell
kubectl create secret tls example-tls-secret --cert=path/to/tls.cert --key=path/to/tls.key -n production
```
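If you prefer to keep everything declarative, the same Secret can be expressed as a manifest. This is a sketch: the two data values are placeholders for the base64-encoded PEM contents of your own certificate and key.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: example-tls-secret
  namespace: production
type: kubernetes.io/tls          # the type the kubectl create secret tls command produces
data:
  tls.crt: <base64-encoded certificate>   # placeholder
  tls.key: <base64-encoded private key>   # placeholder
```

Adding `--dry-run=client -o yaml` to the kubectl command prints such a manifest without creating anything, which is a convenient way to generate it.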

When you have simple needs, you need a simple solution, and we saw that Traefik is an excellent fit in that scenario. Traefik is fully compatible with the Ingress specification and will handle any request you can describe using that standard.

Limitations of Kubernetes Ingress

The premise of the Ingress object was to define a standard capable of expressing every routing need the user would face. Then, users would pick their Ingress Controller of choice. Unfortunately (or fortunately), there is more to routing than just paths, and chances are your ingress controller of choice can do (much) more than path-based routing, which is especially true if you’re used to full-featured solutions like Traefik.

These limitations include:

- Limited Routing Rules: As we said, the Ingress object, while offering basic routing rules based on the request host and path, falls short in providing advanced routing capabilities such as header-based routing or traffic splitting.

- HTTP Only: The primary function of the Ingress object is to route HTTP and HTTPS traffic. Support for other protocols, such as TCP and UDP, simply doesn’t exist.

- Limited to routing: The Ingress object offers no way to configure the other features your ingress controller may provide, such as tracing, logging, rate limiting, circuit breakers, and many other use cases.

Annotations to the rescue

To support these extra features, Ingress Controller vendors started to leverage the annotation system in Kubernetes, where one can add any text information and attach it to the Ingress object. Unfortunately, no standard has emerged for these annotations, and these annotations themselves are limited:

- No validation: Because annotations are “free text”, there is no safety net to catch typos or syntax errors in the advanced features users want to attach.

- No standard: Each vendor has their own set of features and options, defeating the purpose of having a standard in the first place.

- Too broad: Because annotations are attached to the Ingress itself, it’s difficult to describe a feature on a finer-grained level (e.g., the path or the service).
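To illustrate, here is a sketch of how the earlier Ingress could attach Traefik-specific behavior through annotations. The annotation keys are Traefik’s router annotations for its Kubernetes Ingress provider; the referenced Middleware (prod-rate-limit in the production namespace) is hypothetical here and would have to exist as a Traefik CRD.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-foo-bar
  namespace: production
  annotations:
    kubernetes.io/ingress.class: traefik
    # Traefik-specific, free-text annotations: the API server does not validate them
    traefik.ingress.kubernetes.io/router.entrypoints: websecure
    # Format: <namespace>-<middleware name>@kubernetescrd (hypothetical Middleware)
    traefik.ingress.kubernetes.io/router.middlewares: production-prod-rate-limit@kubernetescrd
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /foo
            pathType: Exact
            backend:
              service:
                name: foo-service
                port:
                  number: 80
          - path: /bar
            pathType: Exact
            backend:
              service:
                name: bar-service
                port:
                  number: 80
```

Note how the middleware applies to both paths at once, and a misspelled key (say, `router.middleware`) would be accepted by the Kubernetes API server without complaint.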

Introducing Traefik Kubernetes CRD: A better alternative

So you chose Traefik for a reason, and that reason is you want to leverage every feature it has to offer.

Since annotations already tie your configuration to a specific ingress controller, which breaks the promise of compatibility between controllers, and still don’t overcome every limitation, you need a better alternative.

This is where CRDs (Custom Resource Definitions) come into play.

Not only does Kubernetes come with built-in objects, it also lets you define your own, extending what you can describe.
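As a minimal sketch of what defining your own object looks like, the manifest below registers a hypothetical Greeting kind in a hypothetical example.com API group, then creates one instance of it. Traefik ships its own CRDs in the same way, just with much richer schemas.

```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: greetings.example.com   # must be <plural>.<group>
spec:
  group: example.com            # hypothetical API group
  scope: Namespaced
  names:
    kind: Greeting
    singular: greeting
    plural: greetings
  versions:
    - name: v1alpha1
      served: true
      storage: true
      schema:
        openAPIV3Schema:        # the API server validates objects against this schema
          type: object
          properties:
            spec:
              type: object
              properties:
                message:
                  type: string
              required: ["message"]
---
apiVersion: example.com/v1alpha1
kind: Greeting
metadata:
  name: hello
  namespace: default
spec:
  message: "Hello from a custom resource"
```

Unlike annotations, a Greeting without a message field is rejected by the API server. Applying both documents in one go can fail if the CRD is not yet established when the custom object arrives; applying the CRD first avoids that. Once registered, `kubectl get greetings` works like it does for any built-in kind.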

Traefik Kubernetes CRDs (Custom Resource Definitions) are a powerful tool that overcomes the limitations of the Ingress specification and offers more options on top of providing a clear structure, for example:

- IngressRoute: This is the extended equivalent of the Ingress object. It adds support for various options, such as load balancing algorithms.

- Middleware: Middlewares can be mixed and matched to handle everything that goes beyond routing, whether it’s access control, header updates, circuit breakers, path manipulation, error handling, redirection, and many others.

- TraefikService: Provides an abstraction for HTTP load balancing and mirroring. It allows you to define how traffic should be distributed among multiple services in your cluster.

- IngressRouteTCP & MiddlewareTCP: As their names imply, they let you define TCP routes and apply middlewares to the TCP connections along the way.

- IngressRouteUDP: Once again, adding support for a different protocol, this time UDP.

- TLSOption: To fine-tune TLS connection parameters such as the minimum TLS version and the cipher suites that should be used.

- ServersTransport: To tweak communication between Traefik and the services.
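To make the list more concrete, here is a sketch of the foo/bar example rewritten as an IngressRoute, adding the header-based routing that the Ingress object cannot express and a Middleware scoped to a single route. The names foo-beta-service, add-beta-header, and the X-Beta header are hypothetical. The sketch assumes Traefik v2, whose CRDs use the traefik.containo.us API group as in the rest of this article (Traefik v3 uses traefik.io and renames the Headers matcher to Header).

```yaml
# Sketch only: foo-beta-service, add-beta-header and X-Beta are hypothetical.
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: add-beta-header
  namespace: production
spec:
  headers:
    customResponseHeaders:
      X-Served-By: foo-beta           # header added to responses of the beta route
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: foo-bar-route
  namespace: production
spec:
  entryPoints:
    - websecure
  routes:
    # Header-based routing: requests sending "X-Beta: true" reach a separate service.
    # By default Traefik gives longer rules higher priority, so this rule is tried first.
    - kind: Rule
      match: Host(`example.com`) && Path(`/foo`) && Headers(`X-Beta`, `true`)
      services:
        - name: foo-beta-service
          port: 80
      middlewares:
        - name: add-beta-header       # applies to this route only
    - kind: Rule
      match: Host(`example.com`) && Path(`/foo`)
      services:
        - name: foo-service
          port: 80
    - kind: Rule
      match: Host(`example.com`) && Path(`/bar`)
      services:
        - name: bar-service
          port: 80
  tls:
    secretName: example-tls-secret
```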

To read more about these CRDs, visit the Traefik CRDs documentation.
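For traffic the Ingress object cannot describe at all, a sketch combining an IngressRouteTCP with a TLSOption might look like the following. Every name here (modern-tls, tcp-app-route, the tcp entry point, the tcp-app Service, and the tcp-example-tls Secret) is hypothetical, and the tcp entry point would need to be declared in Traefik’s static configuration.

```yaml
# Sketch only: all names below are hypothetical.
apiVersion: traefik.containo.us/v1alpha1
kind: TLSOption
metadata:
  name: modern-tls
  namespace: production
spec:
  minVersion: VersionTLS12
  cipherSuites:               # only affects TLS 1.2 and below
    - TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
    - TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRouteTCP
metadata:
  name: tcp-app-route
  namespace: production
spec:
  entryPoints:
    - tcp                     # declared in the static configuration
  routes:
    # HostSNI matches on the server name sent during the TLS handshake
    - match: HostSNI(`tcp.example.com`)
      services:
        - name: tcp-app
          port: 9000
  tls:
    secretName: tcp-example-tls
    options:                  # reference to the TLSOption above
      name: modern-tls
      namespace: production
```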

Example: Achieving Rate Limiting with Traefik CRDs

Now that we know what Traefik CRDs are, let’s use them to add rate limiting to our example: an average of 30 requests per second with a burst of 50 for a Node.js service, accessible through the example.com host.

In the process, we’ll also ask Traefik to handle certificate generation automatically using Let’s Encrypt, a free, automated, and open Certificate Authority (CA) that provides the digital certificates required for HTTPS. This assumes a certificate resolver named letsencrypt is defined in Traefik’s static configuration.
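A minimal sketch of such a resolver, using the TLS-ALPN challenge, could look like this. The email address and storage path are hypothetical placeholders; in practice the storage file should live on persistent storage so certificates survive restarts.

```yaml
# Traefik static configuration (e.g. traefik.yml)
certificatesResolvers:
  letsencrypt:                   # the name referenced by certResolver: letsencrypt
    acme:
      email: admin@example.com   # hypothetical contact address
      storage: /data/acme.json   # hypothetical path for issued certificates
      tlsChallenge: {}           # TLS-ALPN-01 challenge, needs port 443 reachable
```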

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: nodejs
---
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: prod-rate-limit
  namespace: nodejs
spec:
  rateLimit:
    average: 30
    burst: 50
---
apiVersion: v1
kind: Service
metadata:
  name: nodejs
  namespace: nodejs
spec:
  selector:
    app: nodejs
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 3000
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nodejs-deployment
  namespace: nodejs
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nodejs
  template:
    metadata:
      labels:
        app: nodejs
    spec:
      containers:
        - name: nodejs
          image: admintuts/expressjs-hello-world:latest
          ports:
            - containerPort: 3000
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: prod-route
  namespace: nodejs
spec:
  entryPoints:
    - web
    - websecure
  routes:
    - kind: Rule
      match: Host(`example.com`)
      services:
        - name: nodejs
          port: 80
      middlewares:
        - name: prod-rate-limit
  tls:
    certResolver: letsencrypt
```

To apply this configuration, save the above content into a YAML file, say nodejs-rate-limit.yaml, and apply it to your Kubernetes cluster with the following command:

```shell
kubectl apply -f nodejs-rate-limit.yaml
```

The command output should look like:

```
namespace/nodejs created
middleware.traefik.containo.us/prod-rate-limit created
service/nodejs created
deployment.apps/nodejs-deployment created
ingressroute.traefik.containo.us/prod-route created
```

This creates the resources in your cluster, and the nodejs service becomes subject to the rate limit defined by the prod-rate-limit middleware. You can now test your setup: requests beyond that limit should be rejected with an HTTP 429 (Too Many Requests) status.
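By default, Traefik counts requests against the limit per client remote address. As a sketch, assuming your clients identify themselves with an API key header (X-Api-Key and the per-client-rate-limit name are hypothetical), the limit could instead be applied per key and expressed per minute:

```yaml
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: per-client-rate-limit     # hypothetical name
  namespace: nodejs
spec:
  rateLimit:
    average: 100
    period: 1m                    # average is counted per minute instead of per second
    burst: 20
    sourceCriterion:
      requestHeaderName: X-Api-Key   # hypothetical header used to group requests
```

Grouping by header only makes sense if clients cannot freely change that header, so an IP-based criterion remains the safer default for anonymous traffic.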

Bonus: Testing Traefik Rate Limiting

To validate the rate limiting abilities of the configuration we applied above, we can use the hey tool, a popular HTTP benchmarking and load-testing utility. With hey, we can simulate multiple concurrent requests and observe how Traefik enforces the rate limits we have set. Assuming you have hey installed, let's run a test with the following command:

```shell
hey -n 100 -c 10 -q 10 http://example.com
```

Command output:

```
[...]
Status code distribution:
  [200] 75 responses
  [429] 25 responses
```

As you can see above, out of our 100 requests made to the host, 25 were rejected with a 429 status, which shows that our rate limiting strategy is being enforced.

Let's break down the parameters used in the hey command:

- -n 100: Specifies the total number of requests to be made, in this case, 100.

- -c 10: Sets the number of workers running concurrently; here we use 10 to simulate concurrent users.

- -q 10: Sets the rate limit, in queries per second, for each worker. With 10 workers, hey sends at most 10 requests per second per worker, or up to 100 requests per second in total.

- http://example.com: Specifies the URL endpoint we want to target with our requests.

By leveraging a Traefik Custom Resource Definition with the rateLimit configuration option and tools like hey, you can confidently design, test, and fine-tune your rate limiting strategy to achieve the desired level of control and protection for your applications.

Benefits of Using Traefik CRDs

Traefik CRDs deliver a host of benefits for your Ingress and API management. Here are a few reasons to incorporate them into your workflow:

- Flexibility: With Traefik CRDs, you get unparalleled flexibility and fine-grained control over your Ingress traffic. Leverage advanced routing, traffic management, and security features tailored to your bespoke application requirements.

- Compatibility: Traefik CRDs work seamlessly with Traefik’s ecosystem of tools and features, making them an ideal fit for your existing Traefik setup.

- Advanced Use Cases: Traefik CRDs enable you to implement advanced use cases, such as Canary Deployments, A/B testing, and rate limiting that the standard Kubernetes Ingress object may not support. This allows you to implement complex traffic management strategies to ensure smooth application operation.

- Ease of Use: They are easy to define, configure, and manage with standard Kubernetes manifest files, following the same declarative approach as other Kubernetes resources.
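As an illustration of the canary deployment use case, here is a sketch that splits the rate-limited example.com traffic roughly 90/10 between the existing nodejs Service and a hypothetical nodejs-v2 Service using a weighted TraefikService. It reuses the prod-route name, so applying it would update the IngressRoute from the rate limiting example rather than add a competing route.

```yaml
apiVersion: traefik.containo.us/v1alpha1
kind: TraefikService
metadata:
  name: nodejs-canary
  namespace: nodejs
spec:
  weighted:                 # weighted round robin between Kubernetes Services
    services:
      - name: nodejs        # current version, about 90% of requests
        port: 80
        weight: 9
      - name: nodejs-v2     # hypothetical canary Service, about 10% of requests
        port: 80
        weight: 1
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: prod-route
  namespace: nodejs
spec:
  entryPoints:
    - web
    - websecure
  routes:
    - kind: Rule
      match: Host(`example.com`)
      services:
        - name: nodejs-canary
          kind: TraefikService  # point at the TraefikService instead of a Service
      middlewares:
        - name: prod-rate-limit
  tls:
    certResolver: letsencrypt
```

Shifting more traffic to the new version is then a matter of editing the two weights and re-applying the manifest.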

Wrapping It Up

In this article, we delved into Kubernetes CRDs in Traefik. We discussed Kubernetes Ingress limitations and how Traefik CRDs overcome these, providing a practical rate limiting example. We highlighted the benefits of Traefik CRDs, including their flexibility and compatibility. Whether you're a software developer or a DevOps engineer, we encourage you to explore the power of Traefik CRDs in your Kubernetes deployments for a more robust and scalable application infrastructure. Remember, the Traefik community is always here to support you.

In addition, CRDs are not only used in Traefik Proxy. Our recent product Traefik Hub, a Kubernetes-native API Management solution that simplifies API publishing, security, and management, also leverages native Kubernetes constructs, like CRDs, labels, and selectors. It is also fully GitOps compliant, providing quick time to value and increased productivity for users. In an upcoming article, we will cover how to use CRDs in Traefik Hub to simplify API Management. In the meantime, you can start your Traefik Hub trial here.